Beginning Steps in Developing an Attitude Instrument

- Review the literature on the domain you wish to measure (e.g., “computer attitudes”).

- Develop a list of categories (subscales [i.e., constructs]) that you wish to sample from the domain. For example, the domain may be “Computer Attitudes,” and the categories (constructs) might be “Ease of Use of Computers” and “Usefulness of Computers in Education.”

- Write an operational definition for each construct.

- Write 8 to 10 items (statements) for each construct that you believe measure the operational definition you wrote. For example, “Computers will help students learn material faster” might be a statement for the “Usefulness of Computers in Education” construct. Avoid common survey pitfalls, such as double-barreled statements, double negatives, and leading wording. Although the statements for a construct will be similar, each should differ slightly so that it captures a different nuance of the construct.

- Give the statements to at least 5 experts for classification (content/face validity). The panel of experts will attempt to match each statement with its appropriate construct within the domain and will indicate how confident they are in each match. Present the statements in mixed order (in other words, don’t group all the statements about a given construct together).

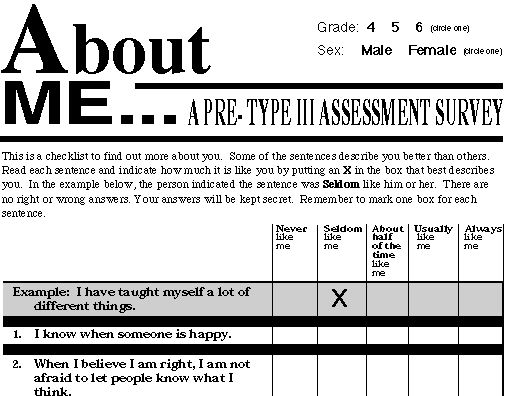
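One way to summarize the expert panel’s responses is to compute, for each statement, the share of experts who matched it to its intended construct and their average confidence in those correct matches. The sketch below is illustrative only: the 1-to-3 confidence scale and the 80% agreement cutoff for “successfully classified” are hypothetical (the handout specifies neither), and the function and variable names are made up for this example. Substitute your own criteria.

```python
from statistics import mean

MIN_EXPERTS = 5        # the handout calls for at least 5 experts
MIN_AGREEMENT = 0.80   # hypothetical cutoff; not specified in the handout


def summarize_classifications(intended, responses, min_agreement=MIN_AGREEMENT):
    """Summarize an expert panel's item-to-construct matches.

    intended:  {item: construct the item was written to measure}
    responses: {item: [(construct_chosen, confidence), ...]}, one pair per expert
    Returns {item: (agreement, mean confidence on correct matches, keep)}.
    """
    summary = {}
    for item, target in intended.items():
        votes = responses[item]
        if len(votes) < MIN_EXPERTS:
            raise ValueError(f"{item}: only {len(votes)} expert ratings")
        # Confidence ratings from the experts who chose the intended construct.
        correct = [conf for chosen, conf in votes if chosen == target]
        agreement = len(correct) / len(votes)
        confidence = mean(correct) if correct else 0.0
        summary[item] = (agreement, confidence, agreement >= min_agreement)
    return summary


# Made-up ratings from five experts on a hypothetical 1-to-3 confidence scale.
intended = {"Q1": "Usefulness", "Q2": "Ease of Use"}
responses = {
    "Q1": [("Usefulness", 3), ("Usefulness", 3), ("Usefulness", 2),
           ("Usefulness", 3), ("Usefulness", 3)],
    "Q2": [("Ease of Use", 2), ("Usefulness", 1), ("Ease of Use", 1),
           ("Usefulness", 2), ("Ease of Use", 2)],
}
for item, (agree, conf, keep) in summarize_classifications(intended, responses).items():
    print(f"{item}: agreement={agree:.0%}, mean confidence={conf:.2f}, keep={keep}")
```

With these made-up ratings, Q1 is matched correctly by all five experts and would be kept, while Q2 is matched correctly by only 3 of 5 and would be a candidate for rewriting or removal.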

- Develop an instrument with the successfully classified items, using a Likert scale. You may wish to rewrite some of the items that were not successfully classified, and you may remove some of the original items that the content experts found difficult to categorize.

- Field test the instrument with the populations for which it is being developed, using 6 to 10 people per item on the instrument.

- Run an exploratory factor analysis on the field-test responses. More advanced students may wish to do a confirmatory factor analysis.

- Name each factor (category) based on the items that loaded on it (loadings > .40).

- Review whether each item conceptually belongs with its factor (subscale), and remove any items that do not.

- Run Cronbach’s alpha for each factor/construct (subscale) to assess internal consistency reliability.

- Modify and retest the instrument if necessary (i.e., if a subscale’s alpha falls below the minimum of .70).
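The field-test guideline of 6 to 10 people per item turns into a target sample size by simple multiplication. A minimal sketch follows; the function name is hypothetical, and the 32-item instrument in the example (4 constructs with 8 retained items each) is an assumption chosen only for illustration.

```python
def field_test_range(n_items, per_item_low=6, per_item_high=10):
    """Return the (minimum, maximum) field-test sample size implied by
    the 6-to-10-respondents-per-item guideline."""
    if n_items <= 0:
        raise ValueError("n_items must be positive")
    return n_items * per_item_low, n_items * per_item_high


# Hypothetical instrument: 4 constructs x 8 retained items = 32 items.
low, high = field_test_range(4 * 8)
print(f"Recruit roughly {low} to {high} respondents.")
```

For 32 items this gives 192 to 320 respondents. Base the count on the items that actually survive expert review, not on the original item pool.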
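The exploratory factor analysis itself would normally be run in statistical software. After it runs, the “> .40” rule for naming factors can be applied mechanically to the resulting loading matrix before the conceptual review of each item. The sketch below uses made-up loadings for six hypothetical items on two factors, numbered from 0. It compares the absolute value of each loading with the cutoff, an assumption made so that negatively worded items still count. Each item is placed on the factor where it loads most strongly. Items that load on no factor, or on more than one, are flagged for closer attention; the handout does not prescribe how to treat such items.

```python
LOADING_CUTOFF = 0.40  # the handout's "> .40" rule


def assign_items(loadings, cutoff=LOADING_CUTOFF):
    """Group items by the factor on which they load above the cutoff.

    loadings: {item: [loading on factor 0, loading on factor 1, ...]},
              e.g., a rotated loading matrix copied from EFA output.
    Returns (factors, unassigned, cross_loading).
    """
    factors, unassigned, cross_loading = {}, [], []
    for item, row in loadings.items():
        # Factors on which the item's absolute loading exceeds the cutoff.
        strong = [f for f, value in enumerate(row) if abs(value) > cutoff]
        if not strong:
            unassigned.append(item)       # loads on no factor
            continue
        if len(strong) > 1:
            cross_loading.append(item)    # loads on several factors
        # Place the item on the factor with its largest absolute loading.
        best = max(strong, key=lambda f: abs(row[f]))
        factors.setdefault(best, []).append(item)
    return factors, unassigned, cross_loading


# Made-up loadings for six hypothetical items on two factors.
loadings = {
    "Q1": [0.72, 0.10], "Q2": [0.65, 0.21], "Q3": [0.15, 0.58],
    "Q4": [0.08, -0.61], "Q5": [0.45, 0.44], "Q6": [0.22, 0.30],
}
factors, unassigned, cross_loading = assign_items(loadings)
for f, items in sorted(factors.items()):
    print(f"Factor {f}: {items}")
print("No loading > .40:", unassigned)
print("Cross-loading:", cross_loading)
```

The factor names still come from reading the grouped items. For example, if factor 0 held the usefulness statements, it might be named “Usefulness of Computers in Education.”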
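Cronbach’s alpha for a subscale with k items is alpha = (k / (k - 1)) * (1 - (sum of the item variances) / (variance of the total scores)), where each respondent’s total score is the sum of his or her responses to that subscale’s items. The sketch below computes this with Python’s standard `statistics.variance` (sample variance). Because the same n - 1 denominator appears in both the numerator and the denominator of the ratio, using population variance instead would give the same alpha. It then flags subscales below the .70 minimum. The subscale names and response data are made up for illustration.

```python
from statistics import variance

MIN_ALPHA = 0.70  # the handout's minimum acceptable alpha


def cronbach_alpha(scores):
    """Cronbach's alpha for one subscale.

    scores: one row per respondent, each row holding that respondent's
            Likert responses to the subscale's k items (same item order).
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*scores))            # one tuple per item
    item_var_sum = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


def subscales_to_revise(subscales, min_alpha=MIN_ALPHA):
    """Return {subscale name: alpha} for subscales below min_alpha."""
    flagged = {}
    for name, scores in subscales.items():
        alpha = cronbach_alpha(scores)
        if alpha < min_alpha:
            flagged[name] = alpha
    return flagged


# Made-up responses from 4 respondents on two hypothetical subscales.
subscales = {
    "Ease of Use": [[4, 5, 4], [3, 3, 3], [5, 5, 4], [2, 3, 2]],
    "Usefulness": [[3, 4], [4, 3], [2, 3], [5, 4]],
}
for name, scores in subscales.items():
    print(f"{name}: alpha = {cronbach_alpha(scores):.2f}")
print("Revise:", sorted(subscales_to_revise(subscales)))
```

With these made-up numbers, the first subscale’s alpha is about .96 and the second’s is .50, so only the second would be flagged for modification and retesting. A real field test would, of course, have far more respondents than this toy example.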

Del Siegle, Ph.D.

Neag School of Education – University of Connecticut

del.siegle@uconn.edu

www.delsiegle.com

Updated 2/15/2021